Normalization of data

In this post, I will cover two of the most popular techniques for normalizing data. Normalization is an important pre-processing step that can improve the performance of many machine learning algorithms, particularly those that are sensitive to the scale of the features, such as distance-based methods.

For this purpose we will use the popular ‘Iris’ dataset, which ships with R's ‘datasets’ package and can be loaded with the command data(iris). We then copy it to a second data frame called data, which will hold the normalized values as we move through the code, leaving the original iris untouched.
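Before normalizing anything, it can help to look at the raw columns. The snippet below is a minimal sketch of that step; selecting columns 1:4 is my own assumption that only the four numeric measurements are of interest, since Species is a factor and cannot be rescaled.

```r
# Load the built-in iris data and copy it so the original stays untouched
data(iris)
data <- iris

# Four numeric measurement columns plus the Species factor
str(data)

# Per-column minimum, mean and maximum before any rescaling
summary(data[, 1:4])
```

The summary shows that the four measurements live on different ranges, which is the situation normalization is meant to address.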

Z-score normalization:

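In this method, each feature is rescaled to have a mean of zero and a standard deviation of one, the same location and scale as a standard normal distribution. (The shape of the distribution itself is not changed.) The resulting values are commonly known as z-scores and are computed as follows:

$$z = \frac{x - \mu}{\sigma}$$

where $\mu$ is the mean of the feature and $\sigma$ is its standard deviation, estimated with sd() in the code.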

Here’s the R code:

data(iris)

data<-iris

for(i in 1:nrow(iris)){

data$Sepal.Length[i]<-(data$Sepal.Length[i]-mean(iris$Sepal.Length))/sd(iris$Sepal.Length)

data$Sepal.Width[i]<-(data$Sepal.Width[i]-mean(iris$Sepal.Width))/sd(iris$Sepal.Width)

data$Petal.Length[i]<-(data$Petal.Length[i]-mean(iris$Petal.Length))/sd(iris$Petal.Length)

data$Petal.Width[i]<-(data$Petal.Width[i]-mean(iris$Petal.Width))/sd(iris$Petal.Width)

}

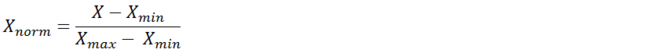
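The loop above recomputes the mean and standard deviation on every iteration. As an alternative, here is a hedged sketch of the same calculation using base R's scale() function, which centers each column by its mean and divides by its sample standard deviation. The num_cols vector is a name I introduce for illustration; it is not part of the original code.

```r
data(iris)
data <- iris

# Names of the four numeric columns (Species is a factor and is left alone)
num_cols <- c("Sepal.Length", "Sepal.Width", "Petal.Length", "Petal.Width")

# scale() returns a matrix of z-scores, one column per feature;
# convert it back to a data frame before assigning into data
data[num_cols] <- as.data.frame(scale(iris[num_cols]))

# Sanity check: each column should have mean ~0 and standard deviation 1
round(colMeans(data[num_cols]), 10)
sapply(data[num_cols], sd)
```

This should produce the same values as the loop, since both use the sample standard deviation from sd().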

Min-Max Scaling:

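In its simplest form, this method rescales each feature to the range between 0 and 1 using the following equation:

$$x' = \frac{x - x_{\min}}{x_{\max} - x_{\min}}$$

where $x_{\min}$ and $x_{\max}$ are the smallest and largest values of the feature.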

Here’s the R code:

data(iris)

data<-iris

for(i in 1:nrow(iris)){

data$Sepal.Length[i]<-(data$Sepal.Length[i]-min(iris$Sepal.Length))/(max(iris$Sepal.Length)-min(iris$Sepal.Length))

data$Sepal.Width[i]<-(data$Sepal.Width[i]-min(iris$Sepal.Width))/(max(iris$Sepal.Width)-min(iris$Sepal.Width))

data$Petal.Length[i]<-(data$Petal.Length[i]-min(iris$Petal.Length))/(max(iris$Petal.Length)-min(iris$Petal.Length))

data$Petal.Width[i]<-(data$Petal.Width[i]-min(iris$Petal.Width))/(max(iris$Petal.Width)-min(iris$Petal.Width))

}
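The same min-max calculation can also be written without an explicit loop. The sketch below assumes a small helper, min_max, which is a hypothetical name of my own and not a base R function, and applies it to each numeric column with lapply().

```r
data(iris)
data <- iris

# Names of the four numeric columns (Species is a factor and is left alone)
num_cols <- c("Sepal.Length", "Sepal.Width", "Petal.Length", "Petal.Width")

# Hypothetical helper: rescale a numeric vector to the range [0, 1].
# Note: a constant column would divide by zero here.
min_max <- function(x) {
  (x - min(x)) / (max(x) - min(x))
}

# Apply the helper to every numeric column at once
data[num_cols] <- lapply(iris[num_cols], min_max)

# Sanity check: every rescaled column should span 0 to 1
sapply(data[num_cols], range)
```

Because the minimum and maximum are computed once per column rather than once per row, this version is also cheaper than the loop on larger datasets.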

That’s it on normalization of data for now. We will see these techniques applied in upcoming posts.

Sanket